The Challenge of Understanding Technical Diagrams: Few Specifications, Many Exceptions

By Jo-Anne Ting

Reading and understanding technical schematic diagrams is something that theoretically is straightforward, given there are common standards such as ISO for symbols and naming conventions. Legends help to decrypt exceptions to common rules. But what happens when you get diagrams that contain exceptions without accompanying legend sheets?

This is the challenge faced by DataSeer and any Machine Learning (ML) software product in situations where specifications are few but exceptions many.

Requirements change as data changes

As we’ve onboarded more users onto DataSeer, we’ve started to see more exceptions to common P&ID conventions. It’s not to say that our initial assumptions are wrong but that the scope for types of symbols and naming conventions has now expanded to include more variations. Similar to an artist’s creative freedom, industrial design and drafting teams have flexibility when creating new drawings including the interpretation of industry best practices and standards. This is especially prevalent in older, legacy diagrams that were created before global standard symbol libraries were introduced in design software.

For example, DataSeer users sometimes upload P&IDs that have valve IDs such as BAV-1234 (where BAV indicates a ball valve) and other times, valves have IDs that look like line numbers (3”-BAV1234-PXJ).

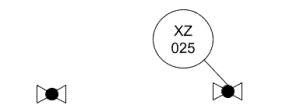

Another example of an exception is one where compound symbols are used to represent one semantic entity. Figure 1 below shows two representations of a globe valve: a standard one following ISO (on left) and a non-conventional one (on right) that includes an attached label. The circle shape can represent a label and/or a stand-alone instrument, and the meaning is derived from the diagram context.

The training and testing process that makes ML unique from other subfields of Artificial Intelligence (AI) hinges on future data being similar to historically observed data. As a result, when requirements start to expand, this means algorithms and ML models implemented in the product need to be adjusted.

Software quality suffers with changing requirements

Changing requirements are the bane of software developers, leading to potential dangers such as release delays, bugs, performance issues, memory leaks, and unwieldy code that’s hard to maintain and scale. An if-else statement with copious else clauses is the textbook example of spaghetti code and one of the downfalls of software products.

Improving algorithmic performance by getting more specific

The key to improving algorithmic performance of an ML software product is not always to come up with a “better” model. This is true especially when the future is yet unknown. So what can we do while we wait and observe what the future looks like?

This conundrum is hinted at in this MIT Technology Review article from November 2020 that describes how a group of 40 researchers across different teams at Google reported “underspecification” as the main reason why ML models fail “in the wild”. Underspecification is not the same thing as a data shift between training and test data. Instead, it implies that even if the training process can produce a “good” model, it wouldn’t know the difference between that and a “bad” one.

One way of avoiding spaghetti code while ensuring acceptable algorithmic performance is to expose more control to the user in the UI/UX in order to get more specificity on the data requirements. For DataSeer, this could mean prompting users to define specific naming or symbol standards for their diagrams. This could be done via upload of a legend sheet, if available, or, if not, individually labeled examples of symbols and naming conventions by the user.

Gathering more accurate requirements is a tricky process though, since these requirements are only discovered when ML models and algorithms fail. Nevertheless, pinning down specificity is a stopgap for a ML software product until more data is observed such that models can be updated (that’s a topic for a future article – when should models be updated?).

Summary

ML software products face a challenge of under specification in domains where there are few specifications but many exceptions. Changes in data lead to requirements that lead to an expansion in scope and require updates to models and algorithms. In such scenarios, it’s important to not fall into the trap of spaghetti code. In DataSeer, we recognize the impossible task of training the system to identify and understand every possible symbol library so we give the user some control over the problem specificity via the UI/UX as a bridge while the system continues to observe all the different data variations we can expect from users.